2024-11-17 Update From: AutoBeta

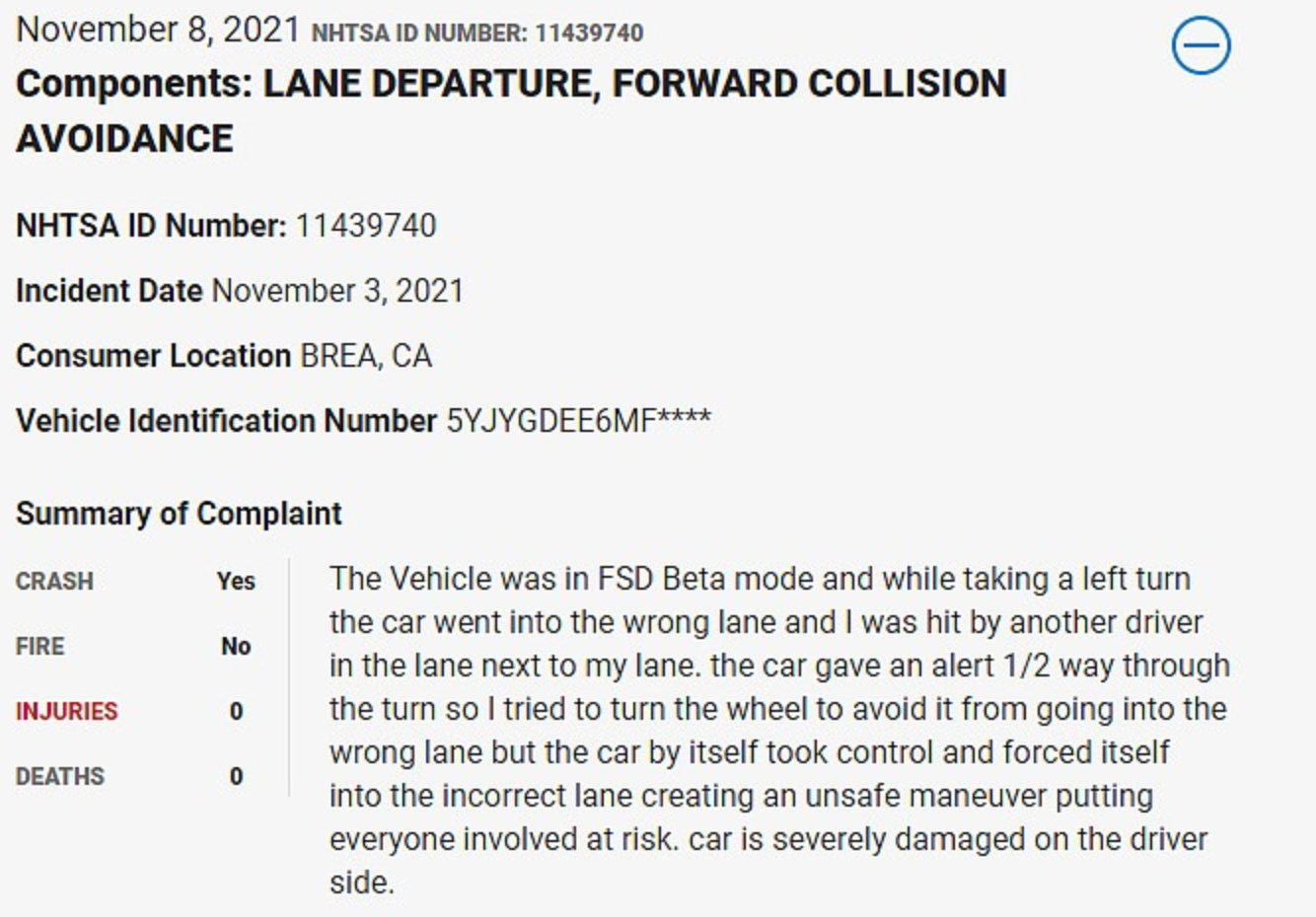

Recently, according to media reports, the US National Highway Traffic Safety Administration (NHTSA) said it had received a complaint reporting an accident involving the beta version of Tesla's "Full Self-Driving" (FSD) feature. According to the complaint, the owner's Tesla Model Y crashed on November 3 while FSD Beta was engaged. The car was severely damaged, but fortunately no one was injured.

According to the owner, the vehicle was in FSD Beta mode at the time of the accident and entered the wrong lane while making a left turn. Partway through the turn the vehicle sounded an alert, and the owner said they tried to turn the steering wheel to keep the car out of the wrong lane, but the FSD system forcibly kept control of the vehicle, which then collided with another car. Tesla has not yet responded to the matter.

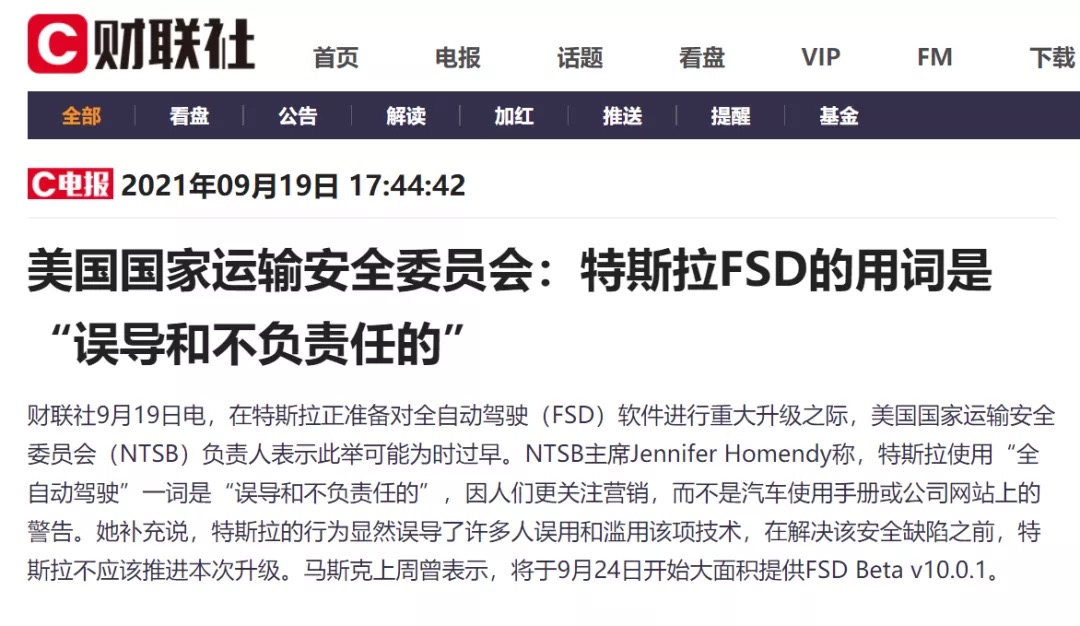

It is worth noting that Tesla's driver-assistance technology has long attracted both attention and controversy. Earlier, media reported that as Tesla prepared a major upgrade of its FSD software, the head of the US National Transportation Safety Board (NTSB) warned that the move might be premature. NTSB Chair Jennifer Homendy considers Tesla's use of the term "Full Self-Driving" "misleading and irresponsible", because consumers pay more attention to marketing than to the warnings in the owner's manual or on the company's website. In her view, this will lead many people to misuse and abuse the technology, and Tesla should not push the upgrade until its safety flaws are resolved.

According to incomplete statistics, over the past five years NHTSA has investigated 25 accidents involving Tesla's driver-assistance system. Peter Knudson, a spokesman for the NTSB, once said: "We have been watching Tesla's new technology closely."

Of course, an "autopilot" feature can bring real convenience to everyday driving and reduce the driver's workload to some extent. At present, however, the safety and reliability of such systems remain limited: in complex road conditions, the computer does not respond as promptly as the human brain. Although the technology has advanced considerably, it will be many years before computers can replace human judgment behind the wheel, and true "fully autonomous driving" is unlikely to be achieved within the next few years.

Tesla CEO Elon Musk once said on Twitter that the goal for FSD is to be 1,000% safer than the average human driver. Responding to that goal, some netizens said that FSD may already be ten times more accurate than people in 99.99% of cases, but a single encounter with the remaining 0.01% can mean a fatal accident. Others pointed out that at its current stage Tesla's FSD should not be regarded as "fully autonomous driving", and owners still need to be ready to take over control of the vehicle at any time.

© 2024 AutoBeta.Net Tiger Media Company. All rights reserved.